In this tutorial, you will build a web scraper project in Python and learn to use the requests and Beautiful Soup libraries.

Web scraping has become an essential technique for extracting data from websites, automating data collection tasks, and gathering insights for various purposes. Python, with its powerful libraries and tools, offers an efficient and flexible platform for developing web scrapers. In this article, we’ll delve into the process of building a web scraper using Python, covering key concepts, implementation steps, and best practices for creating an effective scraping project.

In this web scraping project, you will use Python's requests and Beautiful Soup libraries to scrape country data from a Wikipedia page, then store the result and print it in JSON format.

Before jumping into the code, make sure you have installed the requests and beautifulsoup4 packages by running the command below.

pip install requests beautifulsoup4

Source Code

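import json

import requests
from bs4 import BeautifulSoup

url = "https://en.wikipedia.org/wiki/List_of_countries_and_dependencies_by_population"

# Fetch the page and stop early if the request failed.
r = requests.get(url, timeout=10)
r.raise_for_status()

# Name the parser explicitly so the result does not depend on which parsers are installed.
soup = BeautifulSoup(r.content, "html.parser")

# class_="wikitable" matches any table that carries that CSS class.
table = soup.find("table", class_="wikitable")

data = []
rows = table.find_all("tr")

# Skip the header row, then read the cells of each data row.
for row in rows[1:]:
    cols = row.find_all("td")
    # The indices below need at least four cells in the row.
    if len(cols) > 3:
        country = cols[1].text.strip()
        population = cols[2].text.strip()
        year = cols[3].text.strip()
        data.append({"Country": country, "Population": population, "Year": year})

# Print the result as JSON.
print(json.dumps(data, indent=2))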

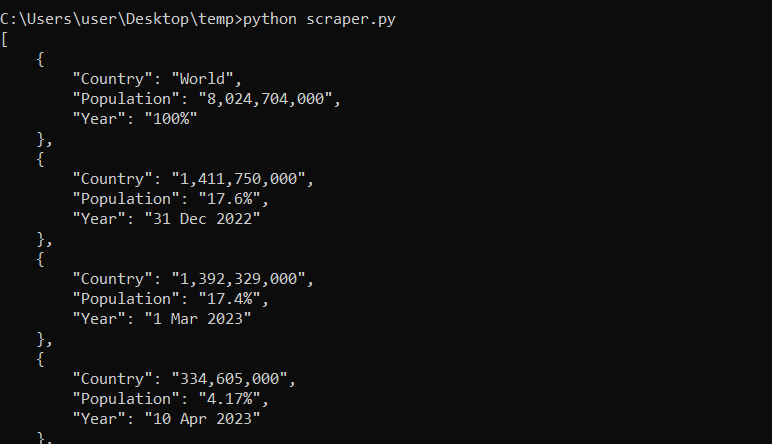

The output of the Python web scraper

The code above shows how to scrape a web page using the requests library to make HTTP requests and the Beautiful Soup library to parse the HTML. It fetches a Wikipedia page that lists countries and their populations, finds the table on that page, and reads the country, population, and year cells from each row into a list of dictionaries.
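To experiment with the parsing step without making a network request, the same row-by-row logic can be tried on a small inline HTML string. The snippet below is a minimal sketch under stated assumptions: `SAMPLE_HTML`, `table_to_records`, and `save_records` are hypothetical names introduced for illustration, the country names and numbers are placeholders rather than real statistics, and the four-column layout is assumed rather than taken from the live page.

```python
import json

from bs4 import BeautifulSoup

# Hypothetical sample markup standing in for a downloaded page.
# The names and numbers are placeholders, not real statistics.
SAMPLE_HTML = """
<table class="wikitable sortable">
  <tr><th>Rank</th><th>Country</th><th>Population</th><th>Date</th></tr>
  <tr><td>1</td><td>Exampleland</td><td>1,000,000</td><td>1 Jan 2024</td></tr>
  <tr><td>2</td><td>Samplestan</td><td>250,000</td><td>1 Jul 2023</td></tr>
  <tr><td colspan="4">Footnote row without enough cells</td></tr>
</table>
"""


def table_to_records(html):
    """Return one dict per data row of the first 'wikitable' found in html."""
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", class_="wikitable")
    if table is None:
        # No matching table: the layout or the class name may have changed.
        return []

    records = []
    for row in table.find_all("tr")[1:]:  # skip the header row
        cols = row.find_all("td")
        if len(cols) < 4:
            continue  # skip rows that lack the expected cells
        records.append({
            "Country": cols[1].get_text(strip=True),
            "Population": cols[2].get_text(strip=True),
            "Year": cols[3].get_text(strip=True),
        })
    return records


def save_records(records, path):
    """Write the records to path as indented JSON."""
    with open(path, "w", encoding="utf-8") as f:
        json.dump(records, f, indent=2, ensure_ascii=False)


records = table_to_records(SAMPLE_HTML)
print(json.dumps(records, indent=2))
```

Returning an empty list when no table is found, and skipping rows with too few cells, keeps the script from crashing if the page layout changes. Calling `save_records(records, "countries.json")` would store the same data in a file, where the file name is only an example.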