Using ChatGPT with Python

ChatGPT is a machine learning model created by OpenAI that interacts in a conversational way. Its dialogue format makes it possible for ChatGPT to answer follow-up questions, admit its mistakes, challenge incorrect premises, and reject inappropriate requests.

ChatGPT is a sibling model to InstructGPT, which was also created and trained by OpenAI.

ChatGPT is trained using Reinforcement Learning from Human Feedback (RLHF) on Azure AI supercomputing infrastructure.

Get a ChatGPT API key from OpenAI

To get an API key for ChatGPT, follow the steps below.

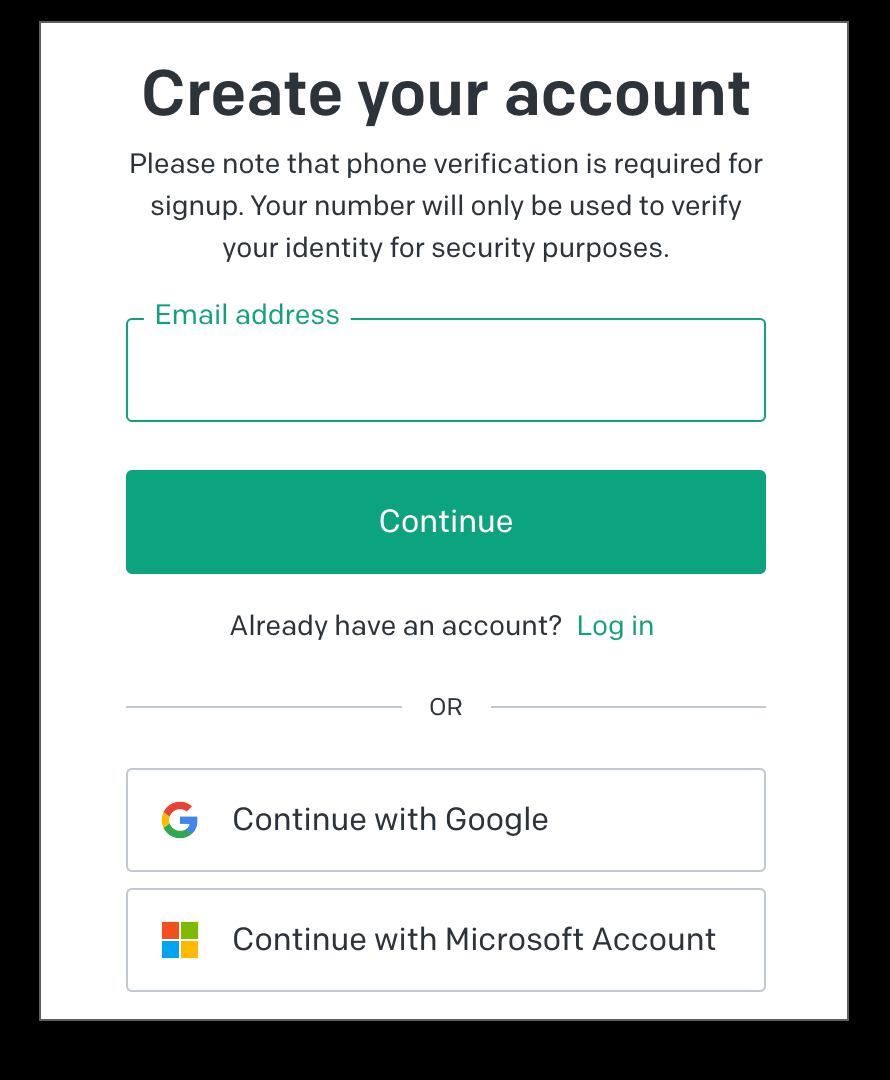

Step 1: The first step is to visit https://chat.openai.com/auth/login and select the “Sign up” option to make an OpenAI account. You have the option to either provide your email and password or sign up with your Google or Microsoft account.

Step 2: Once you have created an OpenAI account, log in to OpenAI.

Step 3: To request permission to use the API, navigate to the API keys page https://platform.openai.com/account/api-keys and press the “Create new secret key” button. It’s crucial to store the secret key in a secure location since it won’t be accessible through your OpenAI account again. In case you lose the key, you’ll need to generate a new one.

Step 4: Once you have an API key from the OpenAI platform, use the OpenAI API Python client library, a Python wrapper for OpenAI’s API. You can install it via pip by running the command “pip install openai”.

Install openai

$ pip install openai

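Load the OpenAI API key in your project, then specify the model, prompt, and maximum number of tokens to interact with the model through the Completion API. The examples in this article use the pre-1.0 interface of the openai package (openai.Completion and openai.ChatCompletion), which version 1.0 of the package replaced with a client object.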

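import os

import openai

# Load your API key from an environment variable or secret management service
openai.api_key = os.getenv("OPENAI_API_KEY")

# Request a short completion; temperature=0 keeps the output as repeatable as possible
response = openai.Completion.create(
    model="text-davinci-003",
    prompt="Say this is a test",
    temperature=0,
    max_tokens=7,
)

# Print the generated text
print(response.choices[0].text)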
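If the OPENAI_API_KEY environment variable is not set, os.getenv returns None and the problem only shows up later, when the first request is rejected. The following is a minimal sketch of a helper that fails early instead. The name load_api_key is hypothetical and not part of the openai package; it uses only the standard library.

```python
import os


def load_api_key(var_name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the given environment variable.

    Hypothetical helper (not part of the openai package). It raises
    RuntimeError when the variable is missing or empty, so the problem
    surfaces before any request is sent.
    """
    key = os.getenv(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Environment variable {var_name} is not set; "
            "export it before running the script."
        )
    return key
```

With this helper in place, the assignment in the example above could be written as openai.api_key = load_api_key().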

Here is another Python program to use ChatGPT as a conversational AI assistant.

import os

import openai

openai.api_key = os.getenv("OPENAI_API_KEY")

# Send a single user message to the chat model
completion = openai.ChatCompletion.create(
    model="gpt-3.5-turbo",
    messages=[
        {
            "role": "user",
            "content": "Tell the world about the ChatGPT API in the style of a pirate.",
        }
    ],
)

# Print the text of the first (and only) reply
print(completion.choices[0].message.content)

Here each message is a dictionary with two keys, role and content. The content is simply the text that you give to ChatGPT. The role can take one of three main values: “system”, “user”, or “assistant”. The “user” role belongs to the person giving the instructions and is the one used in the code above.
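To make the message format concrete, here is a small sketch that assembles a message list from the three roles. The helper names make_message and build_messages are hypothetical (they are not part of the openai package), and the code only builds the list; it does not call the API.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

# The three main roles described above
VALID_ROLES = {"system", "user", "assistant"}


def make_message(role: str, content: str) -> Message:
    """Build one message dictionary, rejecting unknown roles."""
    if role not in VALID_ROLES:
        raise ValueError(f"Unknown role: {role!r}")
    return {"role": role, "content": content}


def build_messages(
    user_input: str,
    history: Optional[List[Message]] = None,
    system_prompt: Optional[str] = None,
) -> List[Message]:
    """Return: optional system message, earlier turns, then the new user message."""
    messages: List[Message] = []
    if system_prompt:
        messages.append(make_message("system", system_prompt))
    messages.extend(history or [])
    messages.append(make_message("user", user_input))
    return messages


if __name__ == "__main__":
    earlier = [
        make_message("user", "Who created ChatGPT?"),
        make_message("assistant", "OpenAI."),
    ]
    for message in build_messages(
        "When was it released?",
        history=earlier,
        system_prompt="You are a concise assistant.",
    ):
        print(message)
```

The resulting list has the same shape as the messages argument in the example above, so it could be passed to the chat endpoint unchanged.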

Using the system role with the ChatGPT API

import openai

# Set up OpenAI API key
openai.api_key = "YOUR_API_KEY"

# Define prompt and parameters
prompt = "What is the meaning of life"
temperature = 0.5
max_tokens = 50
stop = "\n"


# Define function to generate text with OpenAI API
def generate_text(prompt, temperature, max_tokens, stop):
    response = openai.Completion.create(
        engine="davinci",
        prompt=prompt,
        temperature=temperature,
        max_tokens=max_tokens,
        stop=stop,
        presence_penalty=0.6,
        frequency_penalty=0.6,
        n=1,
        stream=False,
    )
    # Return the text of the first completion without surrounding whitespace
    return response.choices[0].text.strip()


# Generate a response
response = generate_text(prompt, temperature, max_tokens, stop)

# Print response
print(response)

If we run this code with the following values:

prompt = "What is the meaning of life?"
temperature = 0.5
max_tokens = 50
stop = "\n"
result = generate_text(prompt, temperature, max_tokens, stop)

the output will look something like this (the exact text varies from run to run):

"The meaning of life is subjective and can vary from person to person. Some might find meaning in their work, while others might find it in their relationships or hobbies. Ultimately, it is up to each individual to define their own purpose and meaning in life."

In this example, we first set up the OpenAI API key and define a prompt and parameters for text generation. We then define a function generate_text that uses the Completion endpoint of the OpenAI API to generate text with the given prompt and parameters.

To use the Completion endpoint, we pass in the following parameters:

engine: The name of the language model to use. In this example, we’re using the Davinci model, which was the most capable and most expensive of OpenAI’s GPT-3 base models.

prompt: The initial message or context for the response.

temperature: A value between 0 and 2 that controls the randomness (“creativity”) of the response. Lower values make the output more focused and repeatable; higher values make it more varied.

max_tokens: The maximum number of tokens in the response. A token is a piece of text, often a word or part of a word, rather than a single character.

stop: A string at which the OpenAI API stops generating. The returned text does not include the stop string.
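The effect of temperature and stop can be illustrated without calling the API. The sketch below is a toy model and not OpenAI's implementation: softmax_with_temperature and cut_at_stop are hypothetical helpers, and the scores are made-up numbers standing in for a model's preferences among three candidate tokens.

```python
import math
from typing import List


def softmax_with_temperature(scores: List[float], temperature: float) -> List[float]:
    """Turn raw scores into probabilities, sharpened or flattened by temperature."""
    if not scores:
        return []
    if temperature <= 0:
        # Assumption for this toy model: treat 0 as "always pick the top score".
        best = max(range(len(scores)), key=lambda i: scores[i])
        return [1.0 if i == best else 0.0 for i in range(len(scores))]
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def cut_at_stop(text: str, stop: str) -> str:
    """Return the text before the first occurrence of the stop string."""
    return text.split(stop, 1)[0] if stop else text


if __name__ == "__main__":
    scores = [2.0, 1.0, 0.0]  # made-up scores for three candidate tokens
    for t in (0.5, 1.0, 2.0):
        probs = softmax_with_temperature(scores, t)
        print(t, [round(p, 3) for p in probs])
    print(repr(cut_at_stop("first line\nsecond line", "\n")))
```

At a low temperature most of the probability goes to the highest-scoring candidate, while a high temperature spreads it more evenly, which is why higher values give more varied text.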

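In the example above, after defining the function, we call it to generate a response and then print the response to the console. Because the Completion endpoint takes a single prompt string rather than a list of role-tagged messages, no role is actually sent here; with the chat endpoint, the same kind of instruction could instead be given as a message whose role is “system”. Note that this example only generates one response; in a real-world application, you would likely use a loop or callback function to generate multiple responses and maintain a conversation with the user.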

Using the user role with the ChatGPT API

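import os

import openai

openai.api_key = os.getenv("OPENAI_API_KEY")

# Conversation history; every user message and reply is appended to this list
messages = []

while True:
    content = input("Ask me: ")
    messages.append({"role": "user", "content": content})

    # Send the whole history so the model can see earlier turns
    completion = openai.ChatCompletion.create(
        model="gpt-3.5-turbo",
        messages=messages,
    )

    chat_response = completion.choices[0].message.content
    print("ChatGPT: " + chat_response)

    # Store the reply under the assistant role for the next turn
    messages.append({"role": "assistant", "content": chat_response})

Using the assistant role with the ChatGPT API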

The assistant role is used to store the model's previous responses. Keeping them creates a record of the conversation that the model can refer back to when a later user instruction depends on earlier messages.

The code below gets a similar effect with the Completion endpoint: instead of a list of messages, it appends each turn to the prompt under “User:” and “Assistant:” prefixes.

import openai

# Set up OpenAI API key
openai.api_key = "YOUR_API_KEY"

# Set up prompt and parameters
prompt = "Hello, how can I assist you today?"
temperature = 0.5
max_tokens = 50
stop = "\n"


# Define function to generate text with OpenAI API
def generate_text(prompt, temperature, max_tokens, stop):
    response = openai.Completion.create(
        engine="text-davinci-003",
        prompt=prompt,
        temperature=temperature,
        max_tokens=max_tokens,
        stop=stop,
        presence_penalty=0.6,
        frequency_penalty=0.6,
        n=1,
        stream=False,
    )
    return response.choices[0].text.strip()


# Start conversation
print(generate_text(prompt, temperature, max_tokens, stop))

while True:
    # Get user input
    user_input = input("> ")

    # Add user input to the prompt and cue the assistant's turn
    prompt += f"\nUser: {user_input}\nAssistant:"

    # Generate response
    response = generate_text(prompt, temperature, max_tokens, stop)

    # Add the response to the prompt so later turns can refer to it
    prompt += f" {response}"
    print(response)

The program above uses the openai library to call the OpenAI API and generate the assistant's side of the conversation, starting from an initial prompt and a set of parameters for text generation.

Inside a while loop, the program prompts the user for input, generates a response using the OpenAI API, adds the user input and response to the prompt with appropriate prefixes, and then prints the response to the console. This process repeats until the program is terminated.
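Because every turn is appended, the conversation record grows for as long as the loop runs, and a model can only take a limited amount of context. One common way to bound it, sketched here for the message-list form of a conversation, is to keep any system messages plus the most recent turns. The helper trim_history is hypothetical, and counting messages rather than tokens is a simplifying assumption.

```python
from typing import Dict, List

Message = Dict[str, str]


def trim_history(messages: List[Message], max_messages: int) -> List[Message]:
    """Keep all system messages plus the last `max_messages` other messages.

    Hypothetical helper: it limits history by message count, which is only
    a rough stand-in for the token limit a real model enforces.
    """
    if max_messages < 0:
        raise ValueError("max_messages must be non-negative")
    system = [m for m in messages if m["role"] == "system"]
    others = [m for m in messages if m["role"] != "system"]
    # A slice of [-0:] would return everything, so handle zero explicitly
    recent = others[-max_messages:] if max_messages > 0 else []
    return system + recent


if __name__ == "__main__":
    history = [
        {"role": "system", "content": "You are a helpful assistant."},
        {"role": "user", "content": "Hello"},
        {"role": "assistant", "content": "Hi! How can I help?"},
        {"role": "user", "content": "Tell me a joke."},
    ]
    for message in trim_history(history, max_messages=2):
        print(message)
```

Calling such a helper on the history before each request would keep the request size roughly constant, at the cost of the model forgetting the oldest turns.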

Notable Features of ChatGPT:

- ChatGPT can write and debug computer programs

- ChatGPT can mimic the style of celebrity CEOs and write business pitches

- ChatGPT can compose music, teleplays, fairy tales, and student essays

- ChatGPT can answer test questions, sometimes at a level above the average human test-taker

- ChatGPT can write poetry and song lyrics

- ChatGPT can emulate a Linux system

- ChatGPT can simulate an entire chat room

- ChatGPT can play games like tic-tac-toe

- ChatGPT can simulate an ATM

Limitations of ChatGPT

- In comparison to its predecessor InstructGPT, ChatGPT attempts to reduce harmful and deceitful responses.

- Potentially offensive prompts are dismissed, and queries are filtered through the OpenAI Moderation endpoint API.

- Journalists have speculated that it could be used as a personalized therapist, but this has not been fully tested.

- While it can remember previous prompts given to it in the same conversation, it still has limitations in terms of memory and contextual understanding.

Recent Updates on ChatGPT

- OpenAI announced it would be adding support for plugins for ChatGPT, including both plugins made by OpenAI and external plugins from developers such as Expedia, OpenTable, Z